Safe Superintelligence (SSI), an A.I. startup launched by OpenAI’s former chief scientist Ilya Sutskever in June, has already raised a staggering $1 billion in venture funding despite having only ten employees, the company announced on its website yesterday (Sept. 4). SSI was co-founded by Sutskever, Daniel Gross, a former Y Combinator partner who previously led A.I. efforts at Apple, and Daniel Levy, who worked alongside Sutskever at OpenAI. The startup is currently developing artificial general intelligence, or AGI, while retaining a focus on safety.

NFDG, a venture capital firm run by Gross and Nat Friedman, participated in SSI’s fundraising alongside Andreessen Horowitz, Sequoia Capital, DST Global and SV Angel. The startup is now valued at $5 billion, according to Reuters, which cited sources familiar with the matter.

The funds will be partially earmarked for hiring at SSI, which says it is “assembling a lean, cracked team of the world’s best engineers and researchers dedicated to focusing on SSI and nothing else.” The company is looking to fill positions across data, hardware, machine learning and systems, according to SSI’s job application form. SSI will emphasize “good character” and extraordinary abilities over experience and credentials, Gross told Reuters, adding that the startup plans to spend the next few years devoted to research and development. Its ten employees are split between two offices in Palo Alto, Calif., and Tel Aviv, Israel.

SSI’s investments will also go towards building up computing power, although the company has yet to partner with any particular cloud providers or chipmakers. Sutskever told Reuters that the startup’s approach to scaling will differ from that of OpenAI but did not specify how. He also noted that, although SSI will not open-source its primary work yet, there will hopefully “be many opportunities to open-source relevant superintelligence safety work.”

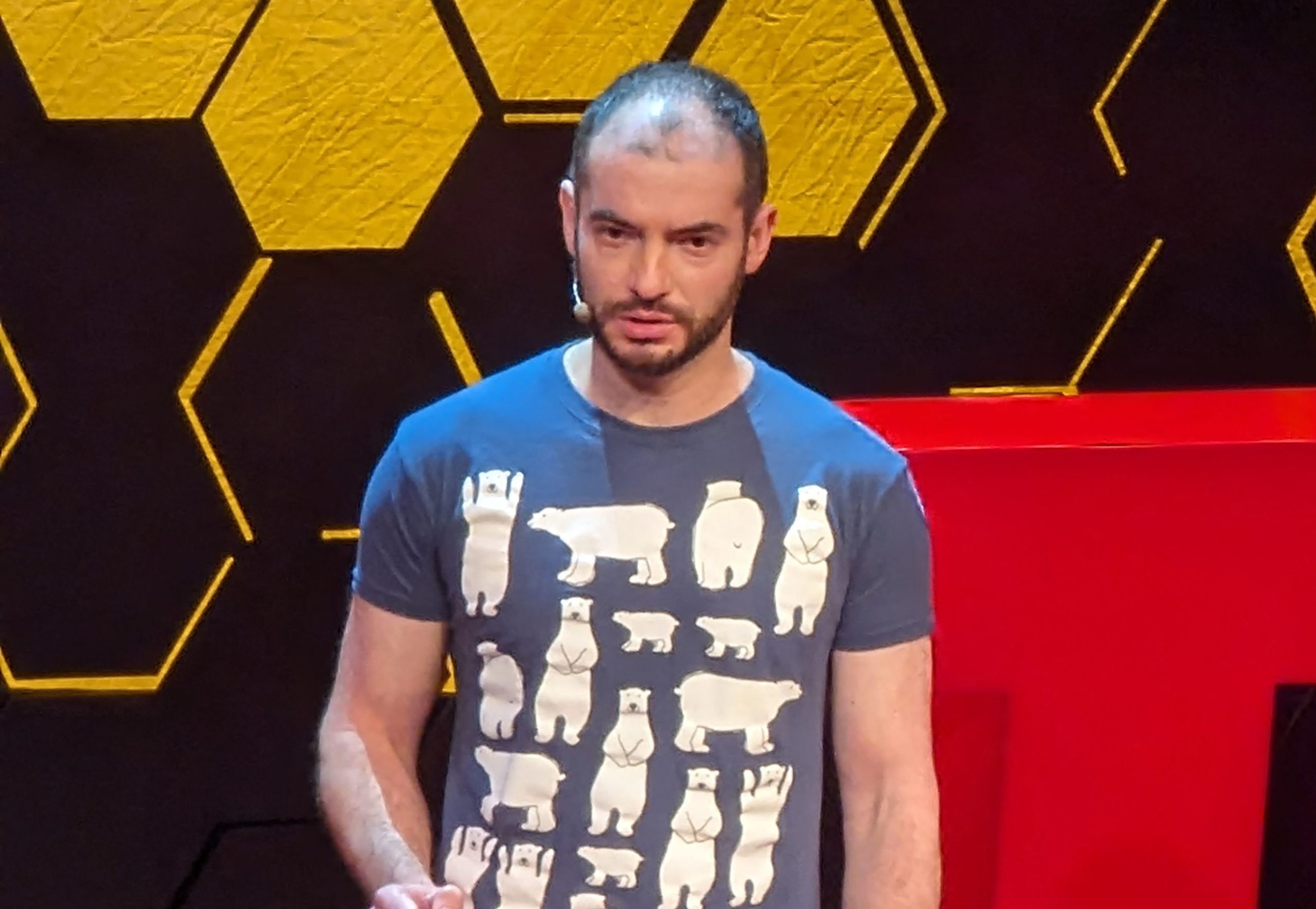

Sutskever, 37, joined Google in 2013 after completing a Ph.D. under the A.I. academic Geoffrey Hinton at the University of Toronto. He went on to co-found OpenAI in 2015 and served as its chief scientist until May of this year. A safety team co-led by Sutskever that focused on A.I.’s existential risks was disbanded shortly after his departure.

Sutskever was a key member of the four-person OpenAI board that briefly ousted the company’s CEO, Sam Altman, last year before the executive was reinstated following pushback from investors and employees. Sutskever said he regretted his involvement in the firing and was subsequently pushed off the OpenAI board. The ousting was made possible by OpenAI’s unique corporate structure: originally founded as a nonprofit, the company is overseen by an independent nonprofit board and has a capped-profit arm. SSI, meanwhile, has a traditional for-profit structure.

Despite his previous struggles within OpenAI, Sutskever told Reuters he has “a very high opinion about the industry” and safety efforts of other A.I. companies. “I think that as people continue to make progress, all the different companies will realize—maybe at slightly different times—the nature of the challenge that they’re facing.”