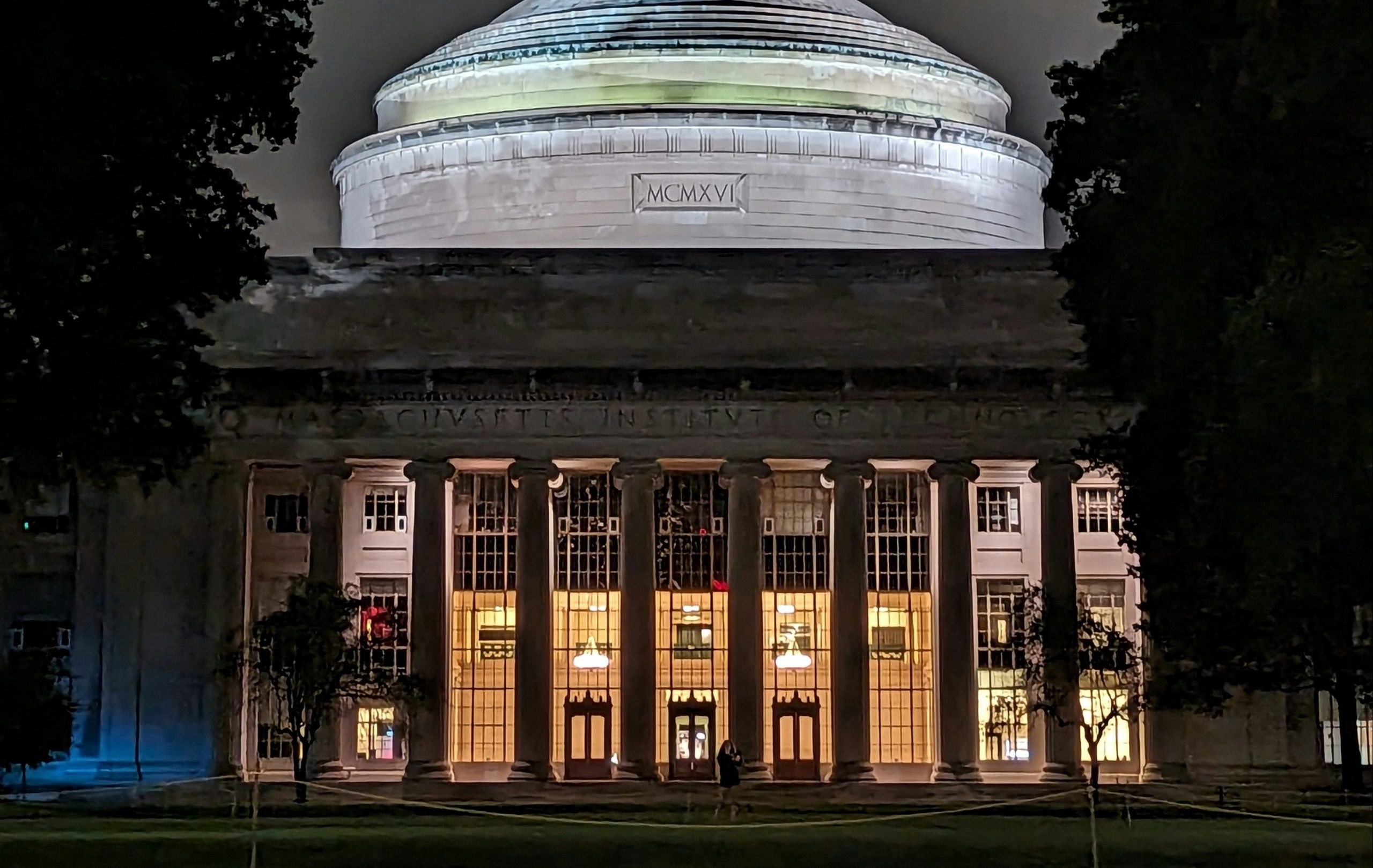

Curiosity around A.I. is on the rise, and the numbers reflect it. The most recent Census data shows that the use of A.I. for business purposes rose from 3.7 percent to 5.4 percent over a five-month stretch this year. So far, however, the risks associated with the emerging technology have remained opaque to everyone, from average ChatGPT users to high-level decision-makers. A special project by MIT might finally change that. This week, the FutureTech Research Project at the Massachusetts Institute of Technology's Computer Science & Artificial Intelligence Laboratory (CSAIL) released the AI Risk Repository, a comprehensive, searchable database that outlines more than 700 risks associated with A.I., whether caused by humans or machines.

"We want to understand how organizations respond to the risks of artificial intelligence," Peter Slattery, a visiting researcher at FutureTech and the project's research lead, told Observer. But when the group went looking for a complete framework of those risks, it couldn't find one. That's when Slattery realized, "This seems like a bigger contribution and maybe more important than we realized."

Through a systematic review backed by a machine learning process called active learning, FutureTech sifted through more than 17,000 records and engaged relevant experts to identify more than 700 risks, which researchers further organized into various categories to make the repository easier to use.

What’s in the database?

The risks are grouped into seven domains: discrimination and toxicity; privacy and security; misinformation; malicious actors and misuse; human-computer interaction; socioeconomic and environmental harms; and A.I. system safety, failures and limitations. These domains are further divided into 23 subdomains, including exposure to toxic content, system security vulnerabilities, false or misleading information, weapon development, loss of human agency, decline in employment and lack of transparency. The FutureTech project is supported by grants from Open Philanthropy, the National Science Foundation, Accenture, IBM and MIT.

FutureTech said the database's content could change over time, and the group welcomes feedback, even providing a form on its site for it. Still, Neil Thompson, director of FutureTech and a research scientist at the MIT Initiative on the Digital Economy, told Observer, "I think we're hopeful that it will have a shelf life that lasts us a little while."

While similar databases exist, none are nearly as comprehensive. FutureTech analyzed existing frameworks, including those from Robust Intelligence, MITRE and AVID, and found that on average they covered only 34 percent of the risks FutureTech identified. Thompson said the lightning-fast growth of A.I. has left people with a fragmented view of the technology. "Establishing this gives us a much more unified view," he said.

The database is accessible to everyone but is most useful to policymakers, risk evaluators, academics and industry professionals. FutureTech's leaders intend to grow the repository and broaden its applicability over time. Thompson said the goal for the coming months is to move toward quantifying A.I. risks and assessing the riskiness of a particular tool or model.